A 360° Overview of Autonomous Testing

Testaify transforms testing from a reactive process into an AI-native, continuously learning system that discovers, tests, and reports on your web app.

How Testaify Works: A 360° Overview of Autonomous Testing

Most teams now use AI tools to speed up development, but as coding gets faster, the testing bottleneck grows. Testaify helps teams keep up.

Our five-part “How Testaify Works” series explains how Testaify launches test sessions, discovers your application, tests it, reports findings, and visualizes application quality. What emerges is a vision of testing as a continuously learning system that catches problems early and helps teams make smarter decisions, rather than a reactive, manual chore.

1. Running Test Sessions — The Launch Point

The journey begins with test sessions, where you quickly set up Testaify’s AI workers to interact with your application. You start by adding your web app to Testaify. Simply provide the application’s base URL, authentication details, and user credentials based on common user roles.

Best practices include testing in your staging environment and setting up a mix of user roles that mirrors what you see in your live environment.

Once added, your app becomes the foundation for every test session: Testaify automatically references its configuration and access rules, so you can launch new sessions in seconds. To configure a session, you name it (ideally with a team-friendly convention), select a test type, choose whether console errors should be flagged, and hit “Start.”

Testaify supports three session types:

- Smoke: fast, 30-minute exploration with limited interactions per page

- Sanity (Middle): balanced, 2-hour exploration with moderate interaction

- Regression: deep testing for up to 6 hours, unlimited page actions

These presets control how aggressively the system explores (discovery max time) and how many actions it performs per page. By making informed choices, teams can strike a balance between speed and coverage. Best practices include starting small (smoke), using consistent naming conventions, scheduling longer runs off-hours, and systematically reviewing results.
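
To make the trade-off concrete, here is a minimal sketch of the three presets as plain data. It is an illustration only, not Testaify's configuration format: the class, field, and function names are hypothetical, and the per-page action limits for smoke and sanity are placeholder numbers, since the text only describes them as "limited" and "moderate."

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SessionPreset:
    name: str
    discovery_max_minutes: int
    # None means "unlimited". The numeric limits below are placeholders,
    # not documented Testaify values.
    max_actions_per_page: Optional[int]


PRESETS = {
    "smoke": SessionPreset("smoke", 30, 5),
    "sanity": SessionPreset("sanity", 120, 20),
    "regression": SessionPreset("regression", 360, None),
}


def pick_preset(minutes_available: int) -> SessionPreset:
    """Return the deepest preset that fits in the available time."""
    fitting = [p for p in PRESETS.values()
               if p.discovery_max_minutes <= minutes_available]
    if not fitting:
        raise ValueError("no preset fits in the available time")
    return max(fitting, key=lambda p: p.discovery_max_minutes)
```

With a 45-minute window, `pick_preset(45)` selects the smoke preset; an overnight window of 600 minutes selects regression.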

2. AI-Powered Application Discovery — Learning Your App

Once the session begins, Testaify doesn’t rely on static scripts—it discovers your app. Our AI discovery engine intelligently crawls and explores, building a dynamic model of your web app’s structure, states, transitions, and business context.

During discovery, Testaify:

- Combines traditional AI (structured crawling, link traversal) with generative AI (contextual understanding) to build a richer model

- Uses domain awareness and guardrails to avoid external links or third-party systems not relevant to your application

- Adapts to the session type: smoke sessions are quicker and more focused, while regression sessions explore more deeply and broadly

The result of AI-native discovery is a visual application model, where nodes represent application states and edges represent transitions or interactions. You can inspect this map to see how Testaify “understands” your app.
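
One way to picture such a model is a small directed graph with a same-host guardrail. The sketch below is a simplified assumption for illustration, not Testaify's internal representation: the `AppModel` class and its methods are hypothetical, and states are identified by URL only, whereas a real model would also capture page state and business context.

```python
from collections import defaultdict
from urllib.parse import urlparse


class AppModel:
    """Hypothetical application model: states are nodes, actions are edges."""

    def __init__(self, base_url: str):
        self.base_host = urlparse(base_url).netloc
        # state -> list of (action, next_state)
        self.transitions = defaultdict(list)

    def in_scope(self, url: str) -> bool:
        # Guardrail: only follow targets on the application's own host.
        return urlparse(url).netloc == self.base_host

    def add_transition(self, source: str, action: str, target: str) -> bool:
        """Record an edge; return False if the target is out of scope."""
        if not self.in_scope(target):
            return False
        self.transitions[source].append((action, target))
        self.transitions.setdefault(target, [])  # make sure the node exists
        return True


model = AppModel("https://staging.example.com")
model.add_transition("https://staging.example.com/login", "submit",
                     "https://staging.example.com/home")
model.add_transition("https://staging.example.com/home", "click banner",
                     "https://ads.example.net/offer")  # rejected: external host
```

After these two calls the model holds two states and one transition; the external link is never added.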

This discovery phase is foundational, as it frees teams from the need to hand-craft test scripts or supply detailed documentation. The model serves as a springboard for intelligent test design.

3. How Testaify Designs and Executes Intelligent Tests

Armed with the application model, Testaify shifts into test design and execution. It doesn’t just fire off random interactions—it uses methodologies informed by AI to craft meaningful coverage.

Some of the techniques include:

- State Transition Coverage: ensuring each discovered state and link between states is tested

- Basis Path Coverage: covering the independent logical paths through your application, including branching logic
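
The state transition technique can be sketched on a toy graph. This is a generic illustration of transition coverage, not Testaify's test design algorithm: for every edge, it walks the shortest route from the start state to the edge's source and then takes the edge. The graph shape and function names are assumptions, and the resulting path set is complete but not minimal.

```python
from collections import deque


def shortest_path(graph, start, goal):
    """Breadth-first search; returns a list of (source, action, target) steps."""
    came_from = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            break
        for action, nxt in graph.get(state, []):
            if nxt not in came_from:
                came_from[nxt] = (state, action)
                queue.append(nxt)
    if goal not in came_from:
        return None  # goal is unreachable from start
    steps, node = [], goal
    while came_from[node] is not None:
        prev, action = came_from[node]
        steps.append((prev, action, node))
        node = prev
    return list(reversed(steps))


def transition_coverage_paths(graph, start):
    """Build test paths from `start` that together exercise every reachable edge."""
    paths, covered = [], set()
    for source, edges in graph.items():
        for action, target in edges:
            edge = (source, action, target)
            if edge in covered:
                continue
            prefix = shortest_path(graph, start, source)
            if prefix is None:
                continue  # skip edges that cannot be reached
            path = prefix + [edge]
            covered.update(path)
            paths.append(path)
    return paths


# Toy model: state -> list of (action, next_state)
graph = {
    "login": [("submit", "home")],
    "home": [("open settings", "settings"), ("log out", "login")],
    "settings": [("save", "home")],
}
paths = transition_coverage_paths(graph, "login")
```

Every path starts at the login state, and the union of their steps contains all four transitions in the toy model.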

Critically, Testaify is role-aware. The test engine utilizes the user roles you set up, ensuring that each persona’s permitted workflows are validated and that access controls and role-specific logic are appropriately handled.

Execution is parallelized with up to 10 “worker bees,” enabling multiple test scenarios to run concurrently and reducing overall runtime while preserving coverage.
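
As a rough analogy for this kind of bounded parallelism, the sketch below runs scenarios through a thread pool capped at 10 workers using Python's standard library. It does not reflect how Testaify schedules its workers; `run_scenario` is a hypothetical placeholder that a real system would replace with browser-driving logic.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_WORKERS = 10  # the cap mentioned in the text


def run_scenario(scenario: str) -> dict:
    # Placeholder: a real worker would execute the scenario's steps
    # against the application and record the outcome.
    return {"scenario": scenario, "passed": True}


def run_all(scenarios, max_workers: int = MAX_WORKERS):
    """Run scenarios concurrently, never exceeding the worker cap."""
    workers = max(1, min(max_workers, MAX_WORKERS))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input.
        return list(pool.map(run_scenario, scenarios))


results = run_all([f"scenario-{i}" for i in range(25)])
```

All 25 scenarios complete, but at most 10 run at any moment, which is the same speed-versus-resources trade-off the worker limit expresses.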

The roadmap includes additional techniques such as boundary value testing, decision table testing, and equivalence partitioning, all built on the existing model-driven foundation.

4. Providing Insights Through Findings — From Test Data to Decisions

Running tests is only half of the story. What sets Testaify apart is its Oracle AI engine, which digests raw test results and surfaces them as findings, not defects. The choice of term matters: “findings” respect that sometimes what looks like a bug may be intentional behavior. Teams must interpret context and make informed judgments.

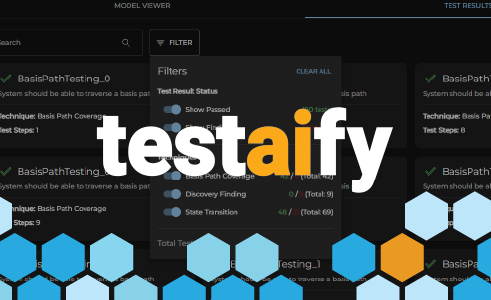

The Test Results interface is your command center. It sits alongside the model viewer and lets you browse, filter, and triage findings.

Highlights include:

- Intelligent filtering: narrow the view by test technique, pass or fail status, and more, so each stakeholder sees what matters most

- Multiple views: list or card/visual views to suit different working styles

- Deep dive with replay: for any finding, you can see the test steps along with a video replay of what the test engine “saw” during execution. The replay adds context and timing clues and exposes visual anomalies

- Mapping back to the model: each finding is connected to the underlying application model. You can see precisely where the issue occurred in your app

This blending of domain, narrative, replay, and model context transforms surface-level errors into actionable insights that developers can efficiently act on.
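
The filtering and model mapping described above can be pictured with a small data structure. This is a hypothetical sketch, not Testaify's data model or API: the `Finding` fields and the `filter_findings` helper are invented for illustration, with `state_id` standing in for the link back to a node in the application model.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Finding:
    title: str
    technique: str  # e.g. "state-transition" or "basis-path"
    passed: bool
    state_id: str   # node in the application model where it occurred


def filter_findings(findings: Iterable[Finding],
                    technique: Optional[str] = None,
                    passed: Optional[bool] = None) -> List[Finding]:
    """Keep findings that match every filter that was supplied."""
    result = []
    for finding in findings:
        if technique is not None and finding.technique != technique:
            continue
        if passed is not None and finding.passed != passed:
            continue
        result.append(finding)
    return result


findings = [
    Finding("Login succeeds", "state-transition", True, "login"),
    Finding("Save returns an error", "state-transition", False, "settings"),
    Finding("Checkout branch skipped", "basis-path", False, "cart"),
]
failed_transitions = filter_findings(findings,
                                     technique="state-transition",
                                     passed=False)
```

Here the filter leaves a single failed state-transition finding, and its `state_id` points at the settings state where the issue occurred.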

5. Monitoring Testing with Testaify’s Dashboard — Seeing the Big Picture

Finally, Testaify surfaces all its testing intelligence in a unified Dashboard that gives leadership and engineering teams a clear, actionable overview.

The dashboard provides:

- High-level views of testing coverage across applications, sessions, severity distributions, and quality status

- Visual analytics (charts, trends) to help you see how quality evolves over time

- Application-level drill-downs to examine findings in specific components or modules

- Trend analysis to surface recurring problem areas or regressions

- Data-driven decision making: you don’t have to guess whether quality is improving, because the data tells you

With this visibility, organizations can monitor not just “are there bugs?” but “is our quality improving or declining?” and allocate resources accordingly.

Why Testaify Matters & What You Get

Together, these five posts tell a story of autonomous, intelligent, and adaptive testing. You get:

- Test session control to choose speed vs depth

- Automatic discovery of your app, without scripting or documentation

- Test design and execution driven by AI and role-based logic

- Insights and findings you can immediately act on, with context and replay

- Dashboards for oversight, trends, and data-driven planning

This is not just the next era of automation — it's AI-native autonomous testing in a system that learns your app, adapts to changes, and surfaces meaningful results to your team.

If you're ready to move from reactive testing to a more innovative, continuous, AI-infused approach, book a brief walkthrough with us to see Testaify in action.

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.

Take the Next Step

Testaify is in managed roll-out. Request more information to see when you can bring Testaify into your testing process.