The Testing Evolution Problem

Modern software testing is trapped in a velocity and expertise mismatch that incremental “AI-assisted” tools can’t fix, making a fundamental shift to AI-driven testing inevitable.

How AI is Changing Software Testing, Part 1

The testing industry is stuck in a velocity crisis. AI promises transformation, but first, we need to understand why traditional approaches can't keep up with modern development.

The Velocity Mismatch

I remember a conversation with my team's QA lead when I started working at a SaaS company about ten years ago. The team was releasing weekly. The testing team was running a regression suite that took three days to complete manually. When I asked how they handled this, the QA lead said something I've heard many times since: "We just test what we think is most risky and hope nothing else breaks." I asked her, “How much time will the team need to truly test the product before releasing it?” She answered, “Five weeks.”

That is the reality of software testing at many organizations.

Development velocity has transformed over the last two decades. We moved from waterfall release cycles measured in months or quarters to continuous deployment measured in hours or days. The emergence of cloud computing, microservices, and API architectures accelerated this shift. Agile methodologies promised to align all parts of the development lifecycle, but testers got left behind.

Here is the uncomfortable truth: while development speed increased significantly, testing speed remained essentially the same. You can add more developers to build features faster. You can scale infrastructure horizontally to handle more load. But testing? Testing still depends on humans designing test cases, humans writing automation scripts, and humans maintaining those increasingly fragile scripts as the application evolves.

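The math does not work. If your team releases twice as often, every regression cycle has to finish in half the time, which already doubles the testing throughput you need. If the volume of change under test doubles as well, you are looking at something closer to a 4x increase in testing capacity. Most organizations respond by doing one of two things: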

- Reduce test coverage: Testing only the "critical paths" and hoping for the best. This approach leads to escaped defects, angry customers, and emergency hotfixes that disrupt the entire development cycle.

- Slow down releases: Waiting for testing to catch up defeats the entire purpose of moving to continuous delivery in the first place.

Neither option is sustainable. The velocity mismatch creates a perpetual state of technical debt in testing. Teams accumulate untested code paths, postponed test automation, and documented but unfixed bugs. Add the coding productivity gains that AI now provides, and eventually the weight of this debt collapses the entire quality process.
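To put rough numbers on the mismatch, here is a minimal sketch using the figures from the anecdote at the start of this section. It assumes a five-day working week and that coverage grows linearly with the time available, both simplifications of mine. The function name `achievable_coverage` is hypothetical.

```python
def achievable_coverage(full_test_days: float, release_interval_days: float) -> float:
    """Fraction of a full test pass that fits into one release interval.

    Assumes (simplistically) that coverage grows linearly with time spent.
    """
    if full_test_days <= 0 or release_interval_days <= 0:
        raise ValueError("durations must be positive")
    return min(1.0, release_interval_days / full_test_days)


# Figures from the anecdote: a "true" test pass takes five weeks and
# releases go out weekly. Five working days per week is an assumption.
FULL_PASS_DAYS = 5 * 5

weekly = achievable_coverage(FULL_PASS_DAYS, 5)
twice_weekly = achievable_coverage(FULL_PASS_DAYS, 2.5)

print(f"weekly releases:       {weekly:.0%} of a full pass")
print(f"twice-weekly releases: {twice_weekly:.0%} of a full pass")
```

Under these assumptions, a weekly cadence leaves room for about a fifth of a full pass, and doubling the cadence halves that again. Real teams parallelize and prioritize, so treat this as an illustration of the trend, not a forecast.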

The Expertise Bottleneck

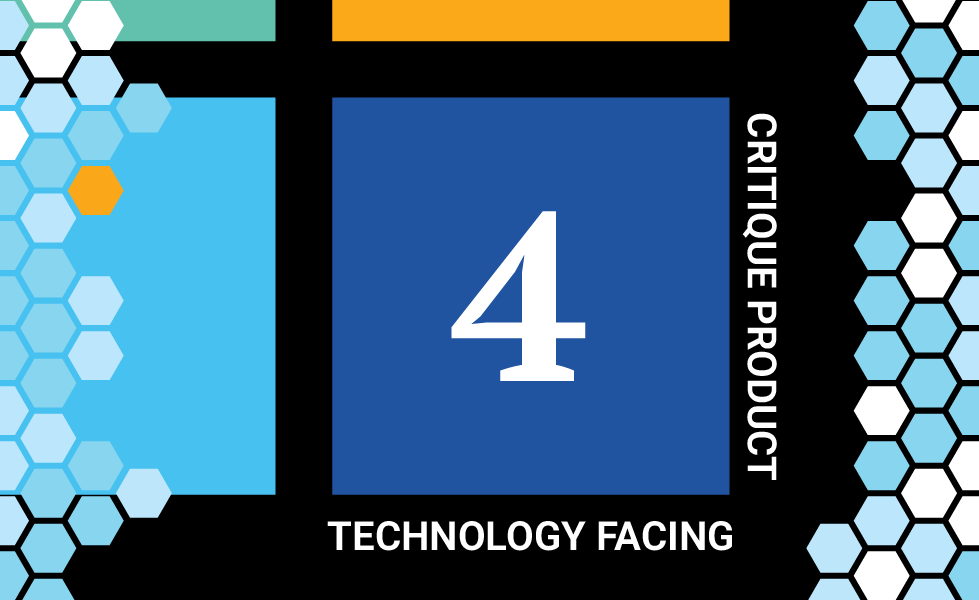

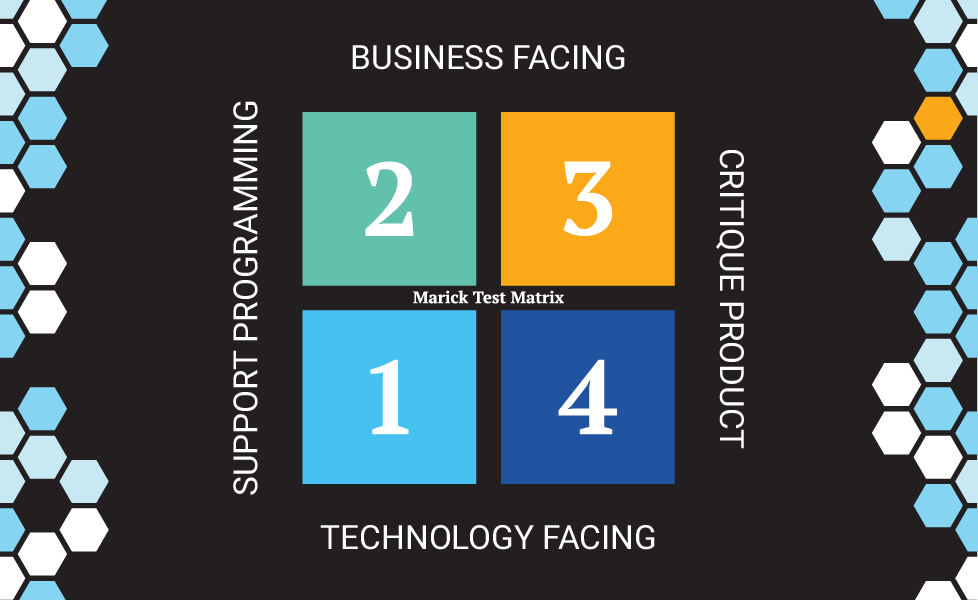

Let's talk about what it actually takes to build a comprehensive testing strategy. In my blog post about Brian Marick's testing quadrants, I outlined the four critical areas every testing strategy must address.

Covering all four quadrants requires different skills, different tools, and different mindsets. Quadrant 1 (unit testing) needs developers who understand testing and ideally TDD. Quadrant 2 (business-facing support) involves collaboration between product managers, UX designers, and engineers, ideally using BDD. Quadrant 3 (business-facing critique) demands test architects who know exploratory testing techniques, boundary value analysis, equivalence partitioning, and dozens of other methodologies. Quadrant 4 (technology-facing critique) requires specialists in performance and security testing, among other areas.
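To make one of the techniques named above concrete, here is a minimal sketch of boundary value analysis for an inclusive integer range. The validation rule `accepts_quantity` and its 1 to 100 range are hypothetical, chosen only for illustration.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Values just outside, on, and just inside each edge of [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def accepts_quantity(quantity: int) -> bool:
    """Hypothetical rule under test: quantities from 1 to 100 are valid."""
    return 1 <= quantity <= 100


cases = boundary_values(1, 100)
results = {value: accepts_quantity(value) for value in cases}

for value, accepted in results.items():
    print(f"quantity={value:>3} accepted={accepted}")
```

Six inputs cover both edges of the range and all three equivalence classes (below, inside, above). Knowing that these six matter more than a hundred random values is exactly the kind of expertise this section is about.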

How many organizations have this expertise readily available? Very few.

Many QA teams I have worked with focus primarily on Quadrant 3, running manual tests through the UI. Some have basic test automation, usually fragile Selenium scripts that break every time the UI changes. A minority practice BDD or have dedicated performance engineers. Comprehensive testing across all four quadrants? That is rare.

Even when organizations try to build this capability, they face a critical problem: the software testing talent pool is limited. Finding experienced test architects who understand the full range of testing methodologies is difficult. Finding automation engineers who can write maintainable test frameworks is equally challenging—and finding people who can do both? Well, good luck.

The expertise bottleneck creates a fundamental constraint on testing quality. You cannot test better than your team's collective knowledge allows. You cannot implement testing techniques that your team does not know exist. You cannot build comprehensive test suites without comprehensive testing expertise.

This situation is not a training problem you can solve by sending people to a few conferences. It takes years to develop deep testing expertise. The industry has been trying to solve this through better education, certification programs, and knowledge sharing. Those efforts help, but they do not change the fundamental equation: expertise is scarce, expensive, and slow to develop.

The "AI-Assisted" Illusion

The testing tool market saw this problem coming and responded with "AI-powered" solutions. Over the last ten years, dozens of tools emerged claiming to revolutionize testing with artificial intelligence. Most of them are selling a mirage.

Let me be specific about what I mean. Many current "AI testing tools" fall into one of these categories:

Test Automation Helpers

These tools use AI to make existing test automation slightly less fragile. They might have smarter element locators that adapt when the UI changes, or they might suggest assertions based on common patterns. That is helpful, but it does not change the fundamental workflow. A human still needs to:

1. Decide what to test

2. Design the test cases

3. Write the automation scripts

4. Maintain the test suite as the application evolves

The AI just makes step 4 a little less painful. You are still doing 95% of the work manually.
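The adaptive-locator idea mentioned above can be sketched as an ordered list of fallback locators. This is a simplified illustration of the general approach, not the algorithm of any particular tool. The DOM is modeled as a plain list of dictionaries, and every name and attribute value here is hypothetical.

```python
from typing import Optional

Element = dict[str, str]
Locator = tuple[str, str]  # (attribute name, expected value)


def find_element(dom: list[Element], locators: list[Locator]) -> Optional[Element]:
    """Return the element matched by the first locator that is unambiguous."""
    for attribute, value in locators:
        matches = [el for el in dom if el.get(attribute) == value]
        if len(matches) == 1:
            return matches[0]
    return None


# Hypothetical page after a redesign: the submit button's id has changed.
dom_after_redesign = [
    {"tag": "button", "id": "btn-primary-7",
     "data-testid": "checkout-submit", "text": "Place order"},
    {"tag": "button", "id": "btn-secondary-2",
     "data-testid": "checkout-cancel", "text": "Cancel"},
]

submit_locators = [
    ("id", "submit-order"),              # original locator, now stale
    ("data-testid", "checkout-submit"),  # fallback that still matches
    ("text", "Place order"),             # last resort
]

button = find_element(dom_after_redesign, submit_locators)
print(button["data-testid"] if button else "not found")
```

The fallback keeps the script alive after the redesign, but a person still chose the button, wrote the locators, and decided what the test should assert.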

Codeless Automation Platforms

These promise that anyone can create automated tests without writing code, sometimes using record-and-playback features enhanced with AI. If you have been in testing for more than five minutes, you know that "record and playback" is one of the most despised phrases in the industry. It does not work at scale. The tests it produces are brittle and unmaintainable, and they miss the entire point of good test design.

Adding AI to record-and-playback does not fundamentally change this. You still need humans to design meaningful test scenarios, understand application behavior, and interpret results. The "codeless" promise just hides the complexity rather than solving it.

Smart Test Maintenance Tools

These tools use ML to detect when tests fail due to application changes versus actual defects. They might automatically update test scripts when UI elements move. That helps with a real problem—test maintenance is expensive—but it is treating a symptom rather than the disease.

The disease is that we are asking humans to do work that scales exponentially while their capacity scales linearly. Making that work 20% more efficient does not solve the velocity mismatch. You need an order-of-magnitude improvement, not incremental gains.

Here is what these "AI-assisted" tools cannot do:

- They cannot discover your application. A human still needs to understand the application structure, identify all the possible paths, and document what exists.

- They cannot design comprehensive test cases. A human still needs to apply testing methodologies, understand business requirements, and think through edge cases.

- They cannot determine what quality means. A human still needs to define acceptance criteria, prioritize testing efforts, and make release decisions.

In other words, they automate execution (the easy part) but leave the hard parts—discovery, design, and decision-making—entirely to humans. That is why I call it an illusion. These tools are not transforming testing; they are optimizing the edges of an outdated model.

Why This Matters Now

Some of you reading this might be thinking: "We have managed this way for years. Why does it need to change now?"

Fair question. Let me give you three reasons:

1. The stakes are higher. When software was primarily on-premises and released quarterly, you had time to fix quality issues before they reached customers. Today, with SaaS delivered continuously and customers expecting zero downtime, a single bad release can destroy retention, generate viral negative publicity, and cost millions in lost revenue. The cost of defects has never been higher.

2. Complexity is accelerating. Modern applications are not monoliths. They are distributed systems with microservices, third-party integrations, mobile apps, APIs, and complex state management. The number of possible combinations to test grows exponentially with each new service or integration point. Traditional manual testing cannot possibly keep up with this complexity curve.

3. Expectations are rising. Users compare your application to the best software they use, not to competitors in your industry. If they experience seamless, bug-free interactions with major tech companies, they expect the same from your product. The bar for "acceptable quality" keeps rising, while your testing capacity remains constrained.
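The exponential growth described in the second point can be sketched with a toy model. It assumes every component (service, integration, client) can independently be in one of a few variants, such as versions or states. The model and the name `configuration_count` are illustrative assumptions, not a measurement of any real system.

```python
from math import prod


def configuration_count(variants_per_component: list[int]) -> int:
    """Number of distinct system configurations if components vary independently."""
    return prod(variants_per_component)


# Assume three variants per component and watch the total grow.
for components in (2, 4, 8, 16):
    total = configuration_count([3] * components)
    print(f"{components:>2} components -> {total:,} configurations")
```

With three variants each, going from 8 to 16 components takes the total from 6,561 to 43,046,721 configurations. No team tests every combination, which is why the choice of what to leave out carries so much risk.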

The combination of these three forces—higher stakes, accelerating complexity, and rising expectations—creates an unsustainable situation. You cannot release slower (competitive pressure prevents it), you cannot hire enough testing expertise (it is too scarce and expensive), and you cannot dramatically reduce test coverage (the risk is too high).

Something has to give. That something is the fundamental model of how testing works.

In Part 2 of this series, we will explore what AI actually changes in the testing workflow—not AI as a helper tool, but AI as the primary agent conducting autonomous testing end-to-end. We will examine the capability shift that makes this possible and what it means for how teams approach quality.

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.
