Providing Insights Through Findings

Testaify’s Oracle AI engine transforms raw test results into actionable insights, enabling teams to make informed decisions about application quality.

How Testaify Works: Providing Insights Through Findings

In our previous blog post, we explored how Testaify designs and executes intelligent tests. Today, we're looking at what happens after those tests run: how Testaify's Oracle AI engine evaluates the results and presents them as actionable findings that help your team make informed decisions about the quality of your application.

The Philosophy Behind Findings vs. Defects

Before we explore the technical capabilities of Testaify's results system, it's essential to understand our philosophical approach to test outcomes. As we detailed in our earlier post about findings versus defects, Testaify deliberately uses the term "findings" rather than "defects" or "bugs."

This distinction isn't merely semantic; it reflects how modern software is actually built. What our Oracle AI engine identifies as a potential issue might be an intentional design choice requested by your customer or stakeholder. A button that appears misaligned, a workflow that seems counterintuitive, or a validation that seems overly strict could each be a deliberate decision based on specific business requirements, user feedback, or regulatory compliance needs.

By presenting these observations as findings rather than defects, Testaify empowers your team to make the critical judgment call about whether each item requires action. This approach respects the complexity of modern applications and acknowledges that only humans can fully understand the context and intent behind design decisions.

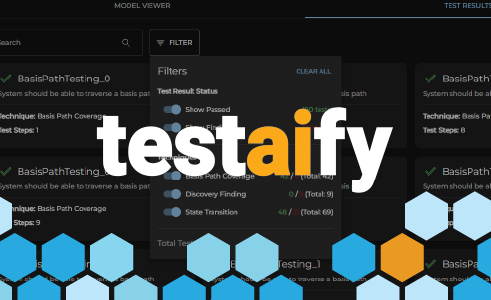

Navigating Test Results: Your Command Center

Once Testaify completes its test execution, all findings are accessible through the Test Results tab, conveniently located next to the Model Viewer tab. This interface serves as your command center for understanding what the Oracle AI engine discovered during testing.

The Test Results section is designed for efficiency, because development teams need to process and prioritize findings quickly. Whether you're a QA engineer triaging issues, a developer investigating a specific problem, or a product manager assessing overall application quality, the interface is built to fit the way you work.

The Power of Intelligent Filtering

While Testaify provides basic search capabilities for quick lookups, the real power lies in the Filter functionality. This sophisticated filtering system enables you to refine your findings in ways that align with your team's priorities and processes.

The Filter goes beyond simple keyword matching. You can narrow your results by selecting specific testing techniques, allowing you to focus on particular aspects of your application.

This granular filtering lets QA engineers, developers, and product managers look at the same test run from the angle that matters most to each of them, so the findings are immediately relevant and actionable for their respective roles.

Multiple Views for Different Needs

Recognizing that different team members have varying preferences for consuming information, Testaify provides both visual and tabular views of test results.

This flexibility ensures that whether you're a detail-oriented analyst who prefers spreadsheet-style data review or a visual learner who benefits from graphical representations, Testaify accommodates your working style, making it a versatile tool for all team members.

Deep Dive Capabilities: Understanding Each Finding

When you select a specific finding for detailed review, Testaify provides multiple ways to understand exactly what happened during the test. You can read through the detailed steps that led to the finding, giving you a straightforward narrative of the Oracle AI's testing process.

But reading the steps is just the beginning. The replay functionality lets you watch a video recording of the actual test execution. This playback shows you exactly what the Oracle AI "saw" and did, providing context that static screenshots or text descriptions cannot match.

Watching these replays often reveals nuances that written descriptions miss: subtle timing issues, visual artifacts that only appear under certain conditions, or interaction patterns that contribute to the finding. This video evidence turns findings from abstract reports into concrete, observable behavior.

Connecting Findings to Your Application Model

One of Testaify's most innovative features is its ability to connect each finding back to your application model. When reviewing a specific finding, you can see exactly which part of your application model was involved in generating that result.

This model integration serves multiple purposes. First, it provides immediate context about where in your application the finding occurred. Second, it allows you to use the model itself as a navigation tool, helping you understand the broader context of the finding within your application's structure.

This connection between findings and the underlying model creates a feedback loop that enhances your understanding of both your application's behavior and the effectiveness of your testing approach.

Conclusion: From Data to Decisions

Testaify's approach to presenting test results transforms raw testing data into actionable intelligence. By presenting findings rather than defects, offering flexible filtering and viewing options, providing detailed replay capabilities, and connecting results back to your application model, Testaify ensures that your team can quickly understand, prioritize, and act on testing outcomes.

The ultimate goal isn't just to identify potential issues. It's to give your team the context and tools needed to make informed decisions about the quality of your application and its readiness for release.

In our next blog post, we'll explore how Testaify's continuous learning capabilities help improve testing effectiveness over time, creating an ever-smarter testing companion for your development process.

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.

Take the Next Step

Testaify is in managed roll-out. Request more information to see when you can bring Testaify into your testing process.