We Need the Test Cup Model Now

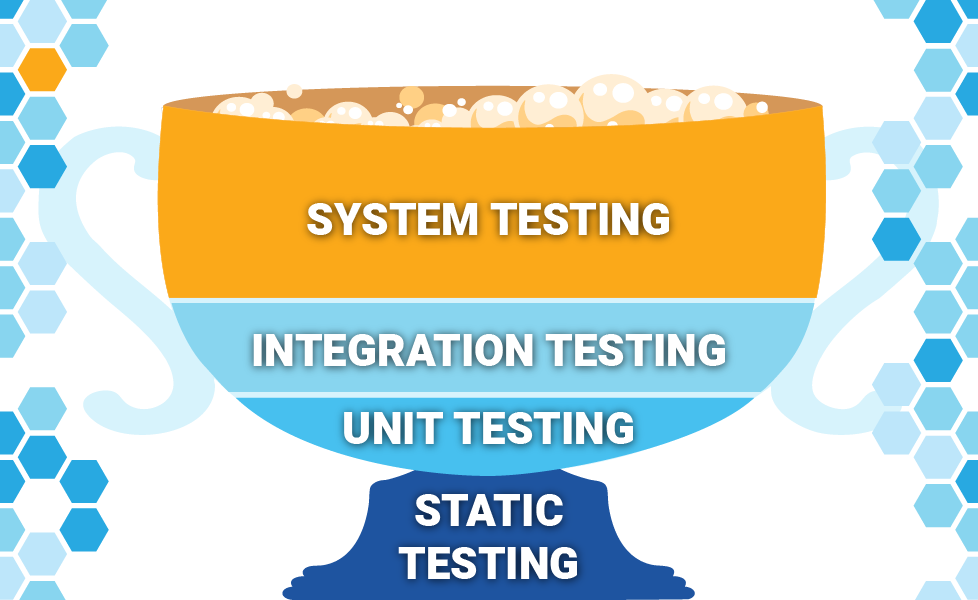

The Test Cup Model highlights the renewed importance of system testing in an AI-driven world, where both human and agentic users influence how software behaves.


Reimagining System Testing for the Age of AI with the Test Cup Model

In a recent blog post, we introduced the Test Cup Model.

In this post, we want to explain why we believe it is time for such a model. As George Box said, all models are wrong, but some are useful. More importantly, a model is useful only within a specific context.

Test Models and Their Contexts

As we mentioned in that post, a consensus is emerging that the test pyramid model stopped being useful some time ago. The test pyramid was effective at emphasizing the importance of unit testing, but it falls short when it comes to validating a system for its users. The model minimizes the importance of system testing, which is crucial for ensuring the product meets user expectations. As soon as teams implemented the test pyramid and deprioritized system testing, they crashed directly into the harsh reality of having users.

In that post, I mentioned a team I once worked with whose CI/CD pipeline contained only unit tests. Even when all tests passed, the product was never ready for release. I hope no one took this as dismissing unit testing. Having unit tests is very useful for improving product quality. Having only unit tests is not. Having a lot of unit tests and very few system tests is not either.

Still, the test pyramid helped a generation of engineers learn the importance of unit testing. In that sense, it achieved something of value for the community.

We also mentioned the test trophy. The test trophy comes from a particular context. It is popular among teams building new cloud-based products that use API-based architecture patterns, such as microservices. Many of these teams also favor interpreted programming languages over compiled ones. Because these languages have no compiler to catch type and syntax errors before runtime, it is not surprising that these teams added static testing to their model.

The model’s focus on integration tests makes a great deal of sense in an API-driven world, because integration testing is the most helpful level for evaluating API endpoints. The test trophy diagram we showed in our earlier post suggests a significant amount of system testing, but it is not the only version of the test trophy in circulation. The best we can do is go back to the source and evaluate the model as Kent C. Dodds presented it in his blog post.

The diagram in that post considerably reduces the significance of system testing (end-to-end tests).

Source: https://kentcdodds.com/blog/static-vs-unit-vs-integration-vs-e2e-tests

This version of the model is problematic because it shares the test pyramid’s main flaw: too few system tests. Products built with this model will face the same challenges as those built by teams using the test pyramid, though to a lesser degree.

We Need System Testing

For anyone who's spent a significant amount of time testing software products, the importance of system testing is crystal clear. It's the only way to ensure that the product will meet users’ expectations. While all three levels of testing are necessary, system testing is the most crucial.

That is why we proposed the Test Cup Model. It includes a large number of system tests that evaluate users’ requirements and ensure the product meets their expectations. It also includes integration tests that verify the API endpoints correctly implement the business logic. Finally, it includes a smaller number of unit tests that help developers as they make changes to the codebase.

We Need the Test Cup Model

AI will enable us to address the challenges of system testing. With AI, we can automate the discovery of a web application, design system tests for it, execute those tests automatically, and report the results. Such an AI solution removes the reliance on fragile test automation scripts and lengthy regression testing sessions, making the testing process more efficient and effective.

But there is another reason we need more system testing, one that most people overlook. AI does not only enable new testing tools that we did not have before; it also enables new ways of using the system. With the emergence of agentic AI, your applications will be used not only by humans but also by AI agents. For certain use cases, AI agents will likely become the primary users in the near future. Although how AI agents will use your applications and APIs is not yet fully understood, one thing is certain: their use will reveal new issues in your product.

What we do know is that system and integration testing will matter more than unit testing in this new context. We need a model that reflects this new world.

The Future of Testing is Here! It is time to sign up for Testaify!

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.


Take the Next Step

Testaify is in managed roll-out. Request more information to see when you can bring Testaify into your testing process.