The Uncomfortable Truth About Q4

When teams postpone the performance, security, and scalability testing of Quadrant 4, they trade short-term speed for costly production problems.

Why Non-Functional Testing is Always Left for Last (And Why It Costs You)

Ask most teams about their testing strategy, and they'll happily walk you through their unit tests, acceptance criteria, and exploratory testing sessions. But ask about non-functional testing (performance, security, scalability) and watch them squirm.

"Oh yeah, we'll definitely test that... before we launch."

Spoiler alert: they won't.

Welcome to Quadrant 4, the most neglected part of any testing strategy.

Quick Context: What Is Quadrant 4?

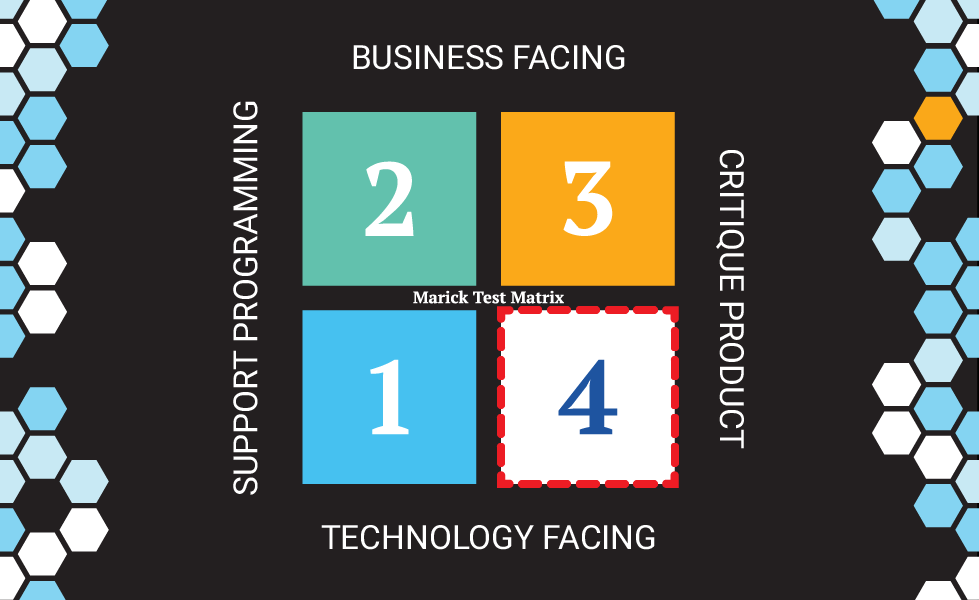

If you're not familiar with Brian Marick's Agile Testing Quadrants, here's the quick version: Marick created a framework that maps different testing types across two dimensions.

One axis goes from "supporting the team" (helping developers build the right thing) to "critiquing the product" (validating what was built). The other axis goes from "technology-facing" (internal quality) to "business-facing" (user-visible quality).

This creates four quadrants:

- Q1: Technology-facing tests that support the team (unit tests, component tests)

- Q2: Business-facing tests that support the team (acceptance tests, BDD scenarios)

- Q3: Business-facing tests that critique the product (exploratory testing, usability testing)

- Q4: Technology-facing tests that critique the product (performance, security, load, scalability)

Quadrant 4 is where you validate the non-functional aspects of your system: the technical qualities that determine whether your application can actually survive production.

And it's almost always the quadrant teams ignore until it's too late.

What Q4 Actually Promises (And What Usually Happens)

Quadrant 4 is supposed to be where you rigorously test the non-functional aspects of your application: Can it handle production load? Does it scale? Is it secure? Will it stay fast under pressure?

These are the "-ilities" that determine whether your application survives contact with real users or becomes a cautionary tale on Hacker News.

In theory, Q4 testing happens throughout development. You're continuously validating performance, checking security, and stress-testing components.

In reality? Q4 is the last thing teams do, the first thing they cut when deadlines hit, and the hardest thing to execute well.

Why "We'll Test That Later" Always Backfires

Here's the problem: Q4 testing is expensive and inconvenient.

Performance testing needs production-like environments. Security testing requires specialized tools and expertise. Load testing involves infrastructure capable of simulating thousands of users hitting your system simultaneously.

All of this costs money and time that teams convince themselves they don't have right now.

So they make a bet: "Let's focus on getting features working first. We'll do the performance and security stuff before launch."

This bet fails in predictable ways.

Version 1: The deadline arrives, features barely work, and someone says, "We'll monitor it in production and fix issues as they come up." Then production load hits, your database melts down, and you're firefighting in front of angry users instead of fixing problems in a controlled environment.

Version 2: You actually run Q4 tests two weeks before launch and discover your system can't handle more than 50 concurrent users, or there's a massive security hole in your authentication, or memory leaks crash everything after a few hours.

Now you're throwing hardware at the problem, significantly increasing your infrastructure costs. Or you attempt emergency architectural surgery under deadline pressure. The decisions that caused these problems were made months ago and are woven throughout your codebase. What could have been caught with small, incremental tests throughout development now requires panicked redesigns and all-hands-on-deck war rooms.

The "later" mentality doesn't save time. It just moves all your pain to the worst possible moment.

Q4 Isn't About Running Tools - It's About Understanding Results

Here's where teams really misunderstand Q4: they think it's just about running tools.

"We'll fire up JMeter, blast the API with requests, and we're done."

"We'll run a security scanner, get a report, ship it."

Wrong.

Running the tools is the easy part. Any junior developer can configure a load testing tool. The hard part is figuring out what the results mean and what to do about them.

Your load test shows response times degrading at 3,000 concurrent users. Great. Now what? Is it a database bottleneck? Connection pool exhaustion? Inefficient queries? Lock contention? A third-party API that can't keep up? Bad caching strategy?

Performance testing generates mountains of data: response times, throughput, error rates, CPU usage, memory consumption, database query patterns, and network latency. Making sense of this requires real engineering depth. You need to understand distributed systems, database optimization, application architecture, and how everything interacts under load.
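Even the mechanical first step of reading a load test, reducing raw samples to a percentile per load step and finding where the budget breaks, is only the starting point. The Python sketch below does just that with the standard library's `statistics.quantiles`. The `results` dictionary is synthetic, illustrative data (not measurements from any real system), and the 200 ms budget is an assumed figure.

```python
import statistics
from typing import Optional


def p95(samples_ms: list[float]) -> float:
    """95th-percentile response time: quantiles(n=20) returns 19 cut points, the last is p95."""
    return statistics.quantiles(samples_ms, n=20)[-1]


def first_degraded_step(results: dict[int, list[float]], budget_ms: float) -> Optional[int]:
    """Lowest concurrency level whose p95 exceeds the budget, or None if every step passes."""
    for users in sorted(results):
        if p95(results[users]) > budget_ms:
            return users
    return None


# Synthetic samples (ms) per concurrency level; real data comes from your load tool's output.
results = {
    1_000: [80, 85, 90, 95, 100] * 20,
    2_000: [90, 100, 110, 120, 130] * 20,
    3_000: [150, 300, 450, 600, 900] * 20,
}

for users in sorted(results):
    print(f"{users:>5} users: p95 = {p95(results[users]):.0f} ms")
print("first step over a 200 ms budget:", first_degraded_step(results, budget_ms=200))
```

Notice what this cannot tell you. It locates the degradation at 3,000 users, but not whether the cause is the database, connection pool exhaustion, lock contention, or a third-party API. Answering that still means correlating that load step with CPU, memory, query, and network metrics.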

Security testing is the same. Your DAST tool spits out a report with 47 potential vulnerabilities. Which ones actually matter? Is this SQL injection exploitable in practice or a false positive? What's the real attack surface? What mitigations are already in place?

Q4 isn't a checklist you complete. It's specialized engineering work that requires expertise.

That's the real reason Q4 gets neglected: it's hard, and many teams don't have the skills to do it well. Rather than invest in building that expertise, they avoid Q4 testing and cross their fingers.

How to Actually Integrate Q4 (Instead of Pushing It to the End)

The solution isn't "do more Q4 testing right before launch." The solution is bringing Q4 thinking into your development process from day one.

Start With Micro-NFRs in Your Unit Tests

Performance isn't just a system-level problem; it starts at the component level. Don't wait until the end to run load tests. Set performance budgets for individual pieces and test them as you build.

Example: "This API endpoint must respond in under 100ms." That's a unit test. Write it. Run it in CI. Fail the build if it regresses.
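A minimal sketch of such a test in Python, using only the standard library. `OrdersHandler` and the `/orders` path are hypothetical stand-ins for your real endpoint; in a real suite you would start your actual application (or its test client) instead. The test takes the median of several requests rather than a single timing, because one slow request on a shared CI runner would otherwise make the build flaky.

```python
import http.client
import json
import statistics
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

BUDGET_MS = 100.0  # budget from the requirement: respond in under 100ms


class OrdersHandler(BaseHTTPRequestHandler):
    """Hypothetical endpoint under test; replace with your real application."""

    def do_GET(self):
        body = json.dumps({"orders": []}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging during tests


def median_latency_ms(host: str, port: int, path: str, runs: int = 20) -> float:
    """Median wall-clock time of `runs` GET requests, in milliseconds."""
    timings = []
    for _ in range(runs):
        conn = http.client.HTTPConnection(host, port, timeout=5)
        start = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()
        response.read()
        timings.append((time.perf_counter() - start) * 1000)
        conn.close()
        assert response.status == 200
    return statistics.median(timings)


def test_orders_endpoint_within_budget():
    # Port 0 asks the OS for a free port, so parallel CI jobs don't collide.
    server = ThreadingHTTPServer(("127.0.0.1", 0), OrdersHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        host, port = server.server_address[:2]
        latency = median_latency_ms(host, port, "/orders")
        assert latency < BUDGET_MS, f"median {latency:.1f}ms exceeds {BUDGET_MS}ms budget"
    finally:
        server.shutdown()
        server.server_close()
```

Under pytest, the `test_` prefix is enough for discovery, and a failing assertion fails the CI job, which gives you the "fail the build if it regresses" behavior.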

"This database query must complete in under 50ms with 1,000 rows." Unit test it.
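The same pattern applies to the query budget. This sketch seeds an in-memory SQLite database with 1,000 rows and times a single query; the `orders` table, its index, and the query itself are hypothetical. SQLite is an assumption made to keep the example self-contained and will not reproduce your production database's behavior, so treat it as a tripwire for the query's shape and run the same test against your real database engine (for example, in a container) to get meaningful absolute numbers.

```python
import sqlite3
import time

QUERY_BUDGET_MS = 50.0  # budget from the requirement: under 50ms with 1,000 rows


def build_fixture(rows: int = 1_000) -> sqlite3.Connection:
    """In-memory database seeded with a hypothetical `orders` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        [(i % 50, i * 1.5) for i in range(rows)],
    )
    conn.commit()
    return conn


def timed_query_ms(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> float:
    """Run a query to completion (fetching all rows) and return elapsed milliseconds."""
    start = time.perf_counter()
    conn.execute(sql, params).fetchall()
    return (time.perf_counter() - start) * 1000


def test_customer_totals_query_within_budget():
    conn = build_fixture()
    sql = "SELECT customer_id, SUM(total) FROM orders WHERE customer_id = ? GROUP BY customer_id"
    try:
        elapsed = timed_query_ms(conn, sql, (7,))
    finally:
        conn.close()
    assert elapsed < QUERY_BUDGET_MS, f"{elapsed:.2f}ms exceeds {QUERY_BUDGET_MS}ms budget"
```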

"This page must render in under 2 seconds on a throttled connection." Test it at the acceptance level (Q2).

When you treat non-functional requirements as testable constraints from the beginning, you catch performance problems while they're small and fixable, not after they've grown into architectural overhauls.

Make Security Continuous, Not a Pre-Launch Panic

Security can't be an audit you run two weeks before going live. It needs to be baked into your pipeline.

Add Static Application Security Testing (SAST) to your CI/CD pipeline. Every commit gets scanned for common vulnerabilities - SQL injection, XSS, insecure dependencies, and hardcoded credentials. Critical issues fail the build.
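How a critical finding actually fails the build depends on your scanner, but many SAST tools can emit results in SARIF 2.1.0, a standard JSON format for static analysis output. The sketch below assumes that format and treats results whose `level` is `"error"` as critical; that mapping, the script name `sarif_gate.py`, and the rule IDs in any example input are assumptions to adapt to your own tool and severity policy. It returns exit status 1 when anything blocking is found, which most CI systems treat as a failed step.

```python
import json
import sys

# Which SARIF result levels break the build. Treating only "error" as
# critical is a policy assumption; add "warning" to be stricter.
BLOCKING_LEVELS = {"error"}


def blocking_findings(sarif: dict) -> list[str]:
    """Return a 'ruleId at file:line' summary for every blocking result."""
    blocked = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # Simplification: SARIF can inherit the level from the rule's
            # default configuration; here a missing level counts as "warning".
            level = result.get("level", "warning")
            if level not in BLOCKING_LEVELS:
                continue
            location = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            uri = location.get("artifactLocation", {}).get("uri", "?")
            line = location.get("region", {}).get("startLine", "?")
            blocked.append(f"{result.get('ruleId', '?')} at {uri}:{line}")
    return blocked


def main(path: str) -> int:
    """Print blocking findings from a SARIF file and return a CI exit code."""
    with open(path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for finding in findings:
        print(f"BLOCKING: {finding}")
    return 1 if findings else 0


# Only act as a CLI when a SARIF path is passed on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a pipeline, this would run right after the scan step, e.g. `python sarif_gate.py results.sarif`, where the file name is whatever your scanner writes.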

Run Dynamic Application Security Testing (DAST) against your staging deployments. Automatically probe your running application for vulnerabilities before code reaches production.

This testing doesn't replace security experts; you still need them for threat modeling and deep analysis. But by making basic security testing continuous and automated, you catch obvious problems before humans even look at the code.

Change How You Think About Q4

Shifting Q4 left isn't just about tools. It's about changing your team's mindset.

Stop treating non-functional requirements as nice-to-haves you'll address "if there's time." Make them first-class requirements with the same priority as features.

Stop treating Q4 as someone else's job. Performance and security are everyone's responsibility. Developers need to understand how their code performs under load. Testers need to think about security implications. Product managers need to define performance budgets alongside feature requirements.

And invest in the actual skills required to do Q4 well. Performance engineering and security testing are real disciplines. You can't fake expertise by running tools and hoping for the best.

The Real Cost of Q4 Neglect

Q4 failures are expensive in ways that go beyond emergency bug fixes.

Late-stage performance problems force expensive infrastructure over-provisioning or cause service outages that lose customers. Security breaches destroy user trust and trigger regulatory penalties. Scalability issues mean you can't handle growth when it comes.

Most teams know Q4 matters. They just lack the discipline to prioritize it early and the expertise to do it well.

Marick's quadrants were never meant to suggest you do Q1, then Q2, then Q3, then Q4 in sequence. They're perspectives that should operate in parallel throughout development.

For Q4, that means bringing non-functional thinking into every stage, not treating it as a final gate right before production.

Because by the time you're testing at the gate, it's already too late to fix what matters.

About the Author

Testaify founder and COO Rafael E. Santos is a Stevie Award winner whose decades-long career includes strategic technology and product leadership roles. Rafael's goal for Testaify is to deliver comprehensive testing through Testaify's AI-first platform, which will change testing forever. Before Testaify, Rafael held executive positions at organizations like Ultimate Software and Trimble eBuilder.

Take the Next Step

Testaify is in managed roll-out. Request more information to see when you can bring Testaify into your testing process.